ACI Blog

Preventing the spread of Covid-19 with an Apple Watch and a bit of machine learning

January 18, 2021

Covid-19 has been a major disruption worldwide for the past year. Last fall, a team of GVSU computer science students set out to disrupt the spread of Covid-19 using machine learning and wearable technology. Sponsored by Procter & Gamble and mentored by Dr. Venu Vasudevan, Senior Director of Data Science & AI Research, the team explored how a user experience on the Apple Watch could help prevent the spread of Covid-19.

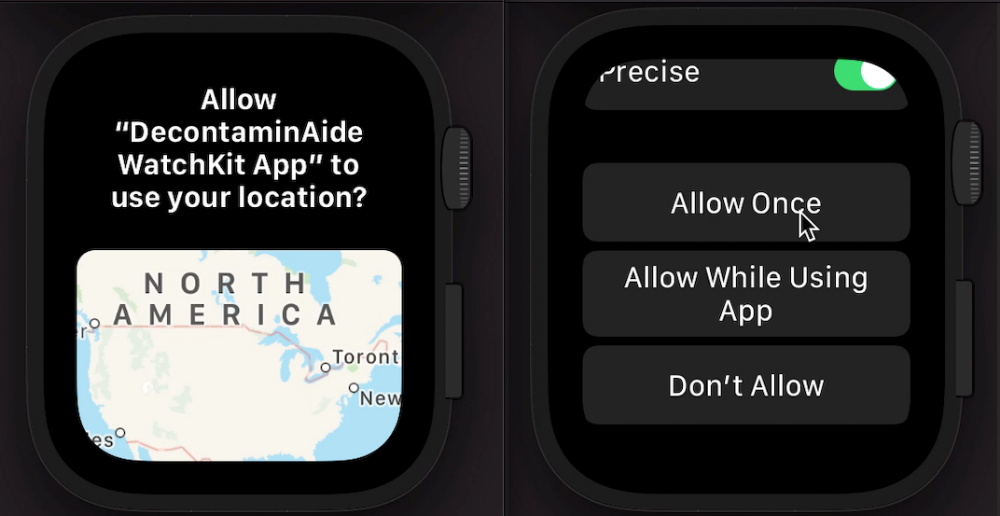

The final deliverable was a prototype Apple Watch app called DecontaminAide. DecontaminAide is designed to help the user monitor everyday actions that could increase their risk of getting Covid-19. Using the features and capabilities of the Apple Watch, the team built the app around a machine learning model that tells the user how many times they touch their face throughout the day. A second machine learning model monitors the audio input and detects whether the user is coughing or sneezing when they touch their face. In addition, the app uses the user’s location to remind them to mask up and start the app when they leave home. The overall goal of the app is to reduce the risk of getting Covid-19 by increasing awareness of common everyday habits that may inadvertently spread it.

After starting the app, the user can navigate to the page that starts a tracking session. This page displays the running count of face touches, while internally the app begins collecting device sensor data. The data is sent to the Activity Classifier machine learning model, which outputs a prediction. The face touch detection algorithm requires a face touch probability of at least 99.9% before it counts a touch, so that the app won’t register more face touches than actually occur. After the model detects a face touch, it takes a few seconds for the probability to settle back below 99%, so the algorithm also keeps a recent-detection flag that gives the probability time to settle.
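The counting rule described above can be illustrated with a short Python sketch. This is not the team's code (the app itself runs on the Apple Watch); it only assumes that the classifier emits one face touch probability per prediction, and the names `FaceTouchCounter` and `count_touches` are hypothetical. The two thresholds are the 99.9% and 99% values mentioned above.

```python
from dataclasses import dataclass

DETECT_THRESHOLD = 0.999  # a touch is counted only at or above 99.9%
SETTLE_THRESHOLD = 0.99   # the flag clears once the probability drops below 99%


@dataclass
class FaceTouchCounter:
    """Hypothetical counter that applies the thresholds and the recent-detection flag."""
    count: int = 0
    recently_detected: bool = False

    def update(self, probability: float) -> int:
        """Feed one model probability and return the running touch count."""
        if self.recently_detected:
            # Still settling from the previous touch: do not count again
            # until the probability has dropped below the settle threshold.
            if probability < SETTLE_THRESHOLD:
                self.recently_detected = False
        elif probability >= DETECT_THRESHOLD:
            self.count += 1
            self.recently_detected = True
        return self.count


def count_touches(probabilities):
    """Run a sequence of probabilities through a fresh counter."""
    counter = FaceTouchCounter()
    for p in probabilities:
        counter.update(p)
    return counter.count
```

With this rule, a sequence such as `[0.1, 0.9995, 0.9999, 0.995, 0.5, 0.9991]` counts two touches: the second and third values belong to the same touch, and the last value is counted only because the probability fell below 99% in between.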

The data retrieved from the sensors are device gravity, user acceleration, rotation rate, and attitude. Gravity, user acceleration, and rotation rate each have x, y, and z components, and attitude has pitch, roll, and yaw, so the machine learning model makes its prediction from 12 data series. The model takes in these sensor series in windows of about 3 seconds, which means that the training samples were also collected in 3-second intervals. To train the model, the team collected 10 face-touch samples sitting, 10 face-touch samples standing, 10 no-face-touch samples sitting, and 10 no-face-touch samples standing, for a total of 40 data samples.
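As a rough illustration of that input layout, the Python sketch below names the 12 series and cuts a recording into 3-second windows. The sampling rate is not stated in this post, so `SAMPLE_RATE_HZ = 50` is purely an assumption, and the channel names, `TRAINING_SAMPLES`, and `split_windows` are hypothetical labels chosen for the example.

```python
# The 12 series: three sensors with x, y, z plus attitude with pitch, roll, yaw.
FEATURES = [
    "gravity_x", "gravity_y", "gravity_z",
    "user_accel_x", "user_accel_y", "user_accel_z",
    "rotation_x", "rotation_y", "rotation_z",
    "attitude_pitch", "attitude_roll", "attitude_yaw",
]

WINDOW_SECONDS = 3    # window length described above
SAMPLE_RATE_HZ = 50   # assumption: the actual rate is not given in the post

# Training samples per condition, as described above (40 in total).
TRAINING_SAMPLES = {
    ("face_touch", "sitting"): 10,
    ("face_touch", "standing"): 10,
    ("no_face_touch", "sitting"): 10,
    ("no_face_touch", "standing"): 10,
}


def split_windows(samples, window_size=WINDOW_SECONDS * SAMPLE_RATE_HZ):
    """Split a list of 12-value sensor rows into non-overlapping windows.

    Trailing rows that do not fill a complete window are dropped.
    """
    windows = []
    for start in range(0, len(samples) - window_size + 1, window_size):
        windows.append(samples[start:start + window_size])
    return windows
```

At the assumed 50 Hz, each window holds 150 rows of 12 values, so an 8-second recording of 400 rows yields two complete windows and the remaining 100 rows are discarded.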

“This project is an excellent example of how a small group of computer science students can take an aspirational concept provided by one of our industry partners and very rapidly deliver an innovative solution to a real-world problem,” said Dr. Robert Adams, the course instructor. “This was one of 10 projects sponsored by our industry partners this past fall semester.”

The GVSU Applied Computing Institute, GVSU’s experiential learning platform for computing students, works closely with industry partners to identify meaningful collaboration opportunities. The institute is hosted by the GVSU School of Computing, and this particular project was completed by students enrolled in their senior project course. You can learn more about sponsoring a senior project here.

A demonstration of the DecontaminAide app is available.